Architecture & Technical Details

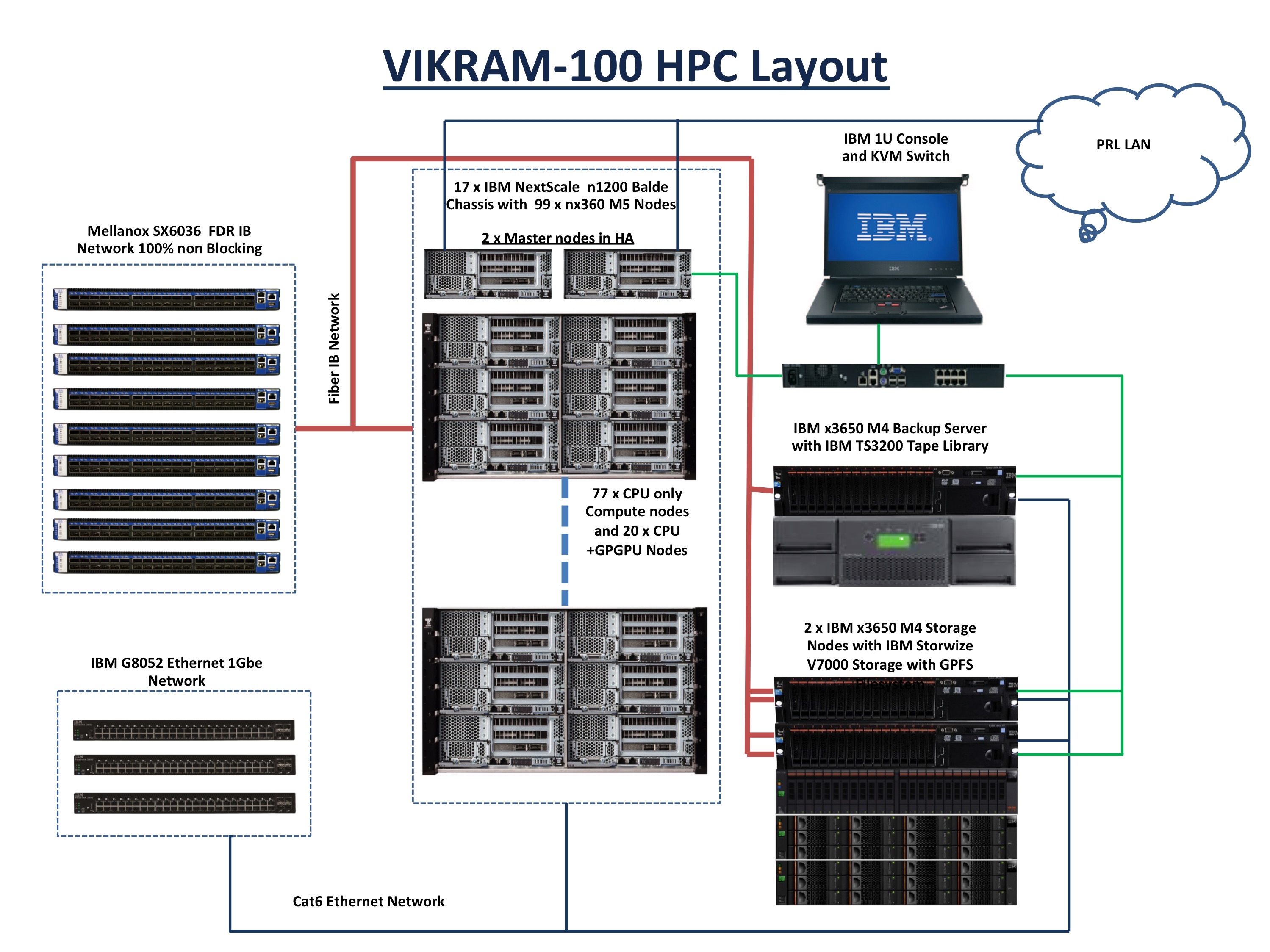

The Vikram-100 has 97 compute nodes, each with two 12-core Intel Xeon E5-2670 v3 (Haswell) CPUs running at 2.30 GHz, 256 GB of RAM and 500 GB of local scratch storage. Twenty of these nodes also carry two NVIDIA Tesla K40 GPU cards each, and each card is capable of 1.66 TFLOPS (double precision). The cluster also has 300 TB of storage on a global high-performance parallel filesystem, shared across all nodes.
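The node counts above can be combined into cluster-wide totals. The sketch below does that arithmetic. It is a rough, theoretical estimate under stated assumptions, not an official or measured figure: it assumes 16 double-precision FLOPs per cycle per core (Haswell AVX2 with two 256-bit FMA units) at the 2.30 GHz base clock, and it takes the quoted 1.66 TFLOPS per K40 card at face value. Sustained performance on real workloads is lower, since AVX-heavy code runs at reduced clocks.

```python
# Theoretical aggregate figures for Vikram-100, derived from the node specs.
# Assumption: 16 DP FLOPs/cycle/core (AVX2, two FMA units) at the 2.30 GHz
# base clock. This is a peak estimate, not a benchmarked result.

NODES = 97
CPUS_PER_NODE = 2
CORES_PER_CPU = 12
CLOCK_GHZ = 2.30
DP_FLOPS_PER_CYCLE = 16      # assumption: Haswell AVX2 FMA peak per core
RAM_GB_PER_NODE = 256
GPU_NODES = 20
GPUS_PER_GPU_NODE = 2
GPU_DP_TFLOPS = 1.66         # per Tesla K40 card, as quoted in the text


def cluster_summary() -> dict:
    """Return core/GPU counts, total RAM and theoretical peak TFLOPS."""
    cores = NODES * CPUS_PER_NODE * CORES_PER_CPU
    # cores * GHz * FLOPs/cycle gives GFLOPS; divide by 1000 for TFLOPS
    cpu_tflops = cores * CLOCK_GHZ * DP_FLOPS_PER_CYCLE / 1000.0
    gpus = GPU_NODES * GPUS_PER_GPU_NODE
    gpu_tflops = gpus * GPU_DP_TFLOPS
    return {
        "cores": cores,
        "total_ram_gb": NODES * RAM_GB_PER_NODE,
        "gpus": gpus,
        "cpu_peak_tflops": round(cpu_tflops, 2),
        "gpu_peak_tflops": round(gpu_tflops, 2),
        "total_peak_tflops": round(cpu_tflops + gpu_tflops, 2),
    }


if __name__ == "__main__":
    for key, value in cluster_summary().items():
        print(f"{key}: {value}")
```

Under these assumptions the cluster has 2,328 CPU cores, 40 GPUs and about 24.8 TB of aggregate RAM, with a theoretical double-precision peak of roughly 85.7 TFLOPS from the CPUs and 66.4 TFLOPS from the GPUs.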

Vikram-100 has an internal primary InfiniBand network with a 100% non-blocking fat-tree topology at FDR speed (56 Gbit/s). It is used both for inter-process communication between compute nodes and for access to the global shared storage. It also has an internal secondary Gigabit Ethernet network for management and backup purposes.

It has two High Availability (HA) master nodes, where users log in to compile, debug and submit jobs. These two nodes constantly 'ping' each other, and if either one goes down, the other takes over to provide uninterrupted service to PRL users. User data is regularly backed up to latest-generation LTO-6 tapes in a 4-drive, 48-slot tape library.
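The heartbeat-based failover between the two master nodes can be illustrated with a small simulation. This is a hypothetical sketch of the general technique, not the HA software actually deployed on Vikram-100. The node names, the 10-second timeout and the `MasterNode`/`simulate` helpers are illustrative assumptions. For brevity only the standby monitors the primary. In the setup described above, the same check would run symmetrically on both nodes.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Assumption: seconds of heartbeat silence before the peer is declared dead.
HEARTBEAT_TIMEOUT_S = 10.0


@dataclass
class MasterNode:
    name: str
    active: bool                       # True if this node is serving users
    last_peer_heartbeat: float = 0.0   # time the peer last answered a ping
    takeover_at: Optional[float] = None

    def receive_heartbeat(self, now: float) -> None:
        """Record that the peer answered a 'ping' at time `now`."""
        self.last_peer_heartbeat = now

    def check_peer(self, now: float) -> None:
        """Promote this standby node if the peer has been silent too long."""
        if not self.active and now - self.last_peer_heartbeat > HEARTBEAT_TIMEOUT_S:
            self.active = True
            self.takeover_at = now


def simulate(primary_fails_at: float, end: float,
             step: float = 1.0) -> Tuple[MasterNode, MasterNode]:
    """Step a simulated clock; the primary stops answering at primary_fails_at."""
    primary = MasterNode("master-a", active=True)    # hypothetical names
    standby = MasterNode("master-b", active=False)
    t = 0.0
    while t <= end:
        if t < primary_fails_at:
            standby.receive_heartbeat(t)  # primary alive: its ping arrives
        else:
            primary.active = False        # primary down: pings stop
        standby.check_peer(t)
        t += step
    return primary, standby


if __name__ == "__main__":
    p, s = simulate(primary_fails_at=5.0, end=30.0)
    print(p.name, p.active, s.name, s.active, s.takeover_at)
```

With the primary failing at t = 5 s, its last ping arrives at t = 4 s and the standby takes over at t = 15 s, the first tick at which the silence exceeds the timeout. A real HA stack would also need to fence the failed node and move the login service's address, which this sketch leaves out.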